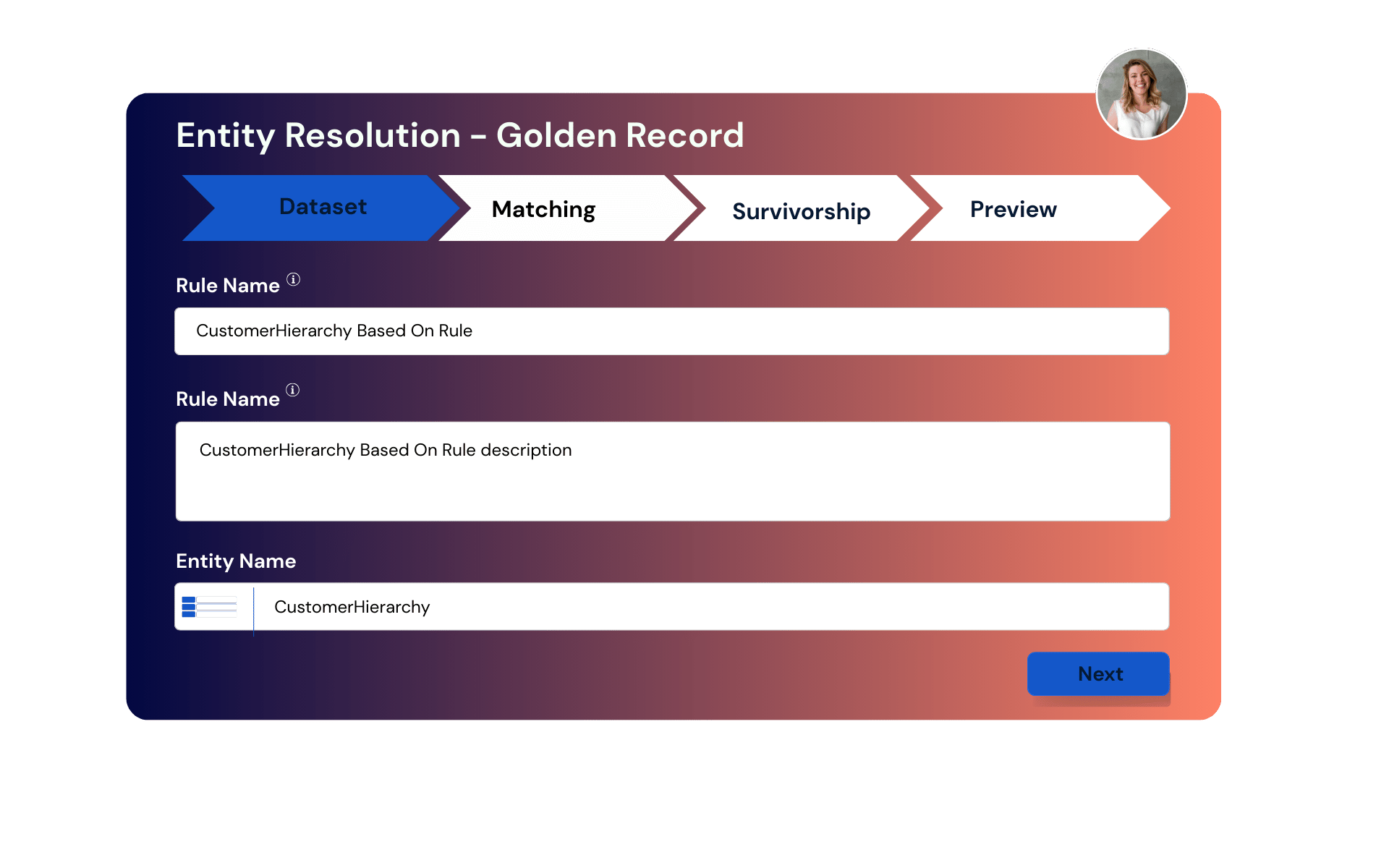

AI-Powered MDM & Entity Resolution

4DAlert enhances MDM with Match & Merge, which links records across multiple domains, eliminates duplicates, and improves accuracy. Its survivorship logic prioritizes the most reliable source when building each master record. Pre-built content for SAP and Salesforce supports integration with both platforms. AI-powered MDM automates data management and improves data quality over time.
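
To make the match, merge, and survivorship ideas concrete, here is a minimal Python sketch. It is not 4DAlert's implementation or API. The source ranking, the field names, and the choice of a normalized email as the match key are all assumptions made for illustration; a real entity-resolution engine would typically use fuzzy or learned matching rather than an exact key.

```python
from collections import defaultdict

# Hypothetical source ranking (assumption): lower number = more trusted.
SOURCE_PRIORITY = {"SAP": 0, "Salesforce": 1, "Spreadsheet": 2}


def match_key(record):
    """Match step: normalize the email so trivially different spellings collide."""
    return record["email"].strip().lower()


def merge(records):
    """Survivorship step: for each field, keep the first non-empty value
    found when scanning records from most to least trusted source."""
    ranked = sorted(records, key=lambda r: SOURCE_PRIORITY[r["source"]])
    master = {}
    for rec in ranked:
        for field, value in rec.items():
            if field == "source":
                continue
            if value and field not in master:
                master[field] = value
    return master


def match_and_merge(records):
    """Group records that share a match key, then merge each group."""
    groups = defaultdict(list)
    for rec in records:
        groups[match_key(rec)].append(rec)
    return [merge(group) for group in groups.values()]


# Illustrative data only.
records = [
    {"source": "Salesforce", "email": "Ana@Example.com ", "name": "Ana Diaz", "phone": "555-0100"},
    {"source": "SAP", "email": "ana@example.com", "name": "Ana M. Diaz", "phone": ""},
    {"source": "Spreadsheet", "email": "bo@example.com", "name": "Bo Lee", "phone": "555-0199"},
]

masters = match_and_merge(records)
for m in masters:
    print(m)
```

In this sketch the two "Ana" records collapse into one master record: the name comes from the higher-ranked SAP record, while the phone number survives from Salesforce because the SAP value is empty.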